Research

Below are short descriptions of research projects that I've had the pleasure of working on.

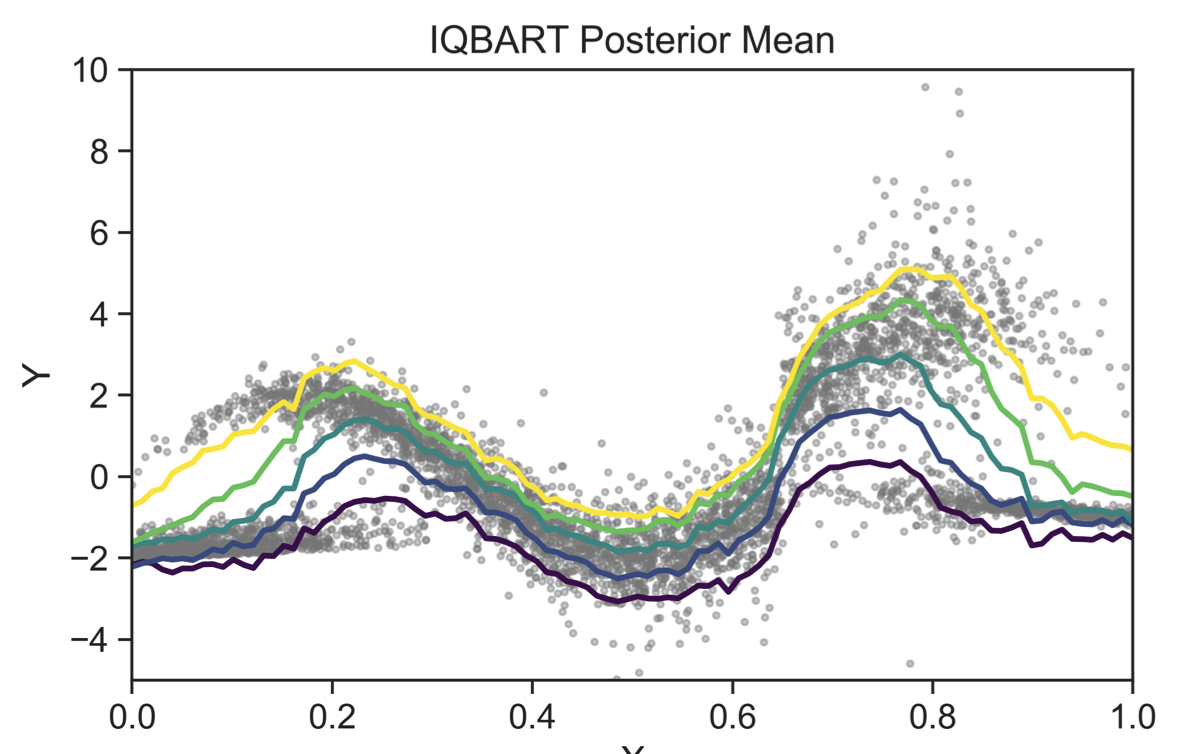

Generative Regression with IQ-BART

IQ-BART is a generalized Bayesian inference procedure that targets the conditional quantile function as the parameter of interest, with a sum-of-trees prior placed on that function. The resulting posterior quantifies uncertainty about a nonparametric conditional model and separates aleatoric uncertainty (inherent randomness in the response) from epistemic uncertainty (uncertainty about the model itself).

Revision submitted to JASA.
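A conditional quantile function supports generative regression through inverse-transform sampling: if τ ~ Uniform(0, 1), then Q(τ | x) is a draw from the distribution of Y given x. The Python sketch below is not IQ-BART. It replaces the sum-of-trees posterior with three hypothetical posterior draws of a Gaussian location-scale model, and `make_quantile_fn`, `sample_response`, and `split_uncertainty` are illustrative names. Under those assumptions, the law of total variance splits the spread of Y within one draw of Q (aleatoric) from the spread of the conditional mean across draws (epistemic).

```python
import random
from statistics import NormalDist, fmean, pvariance

STD_NORMAL = NormalDist()


def make_quantile_fn(slope):
    """Hypothetical conditional quantile function Q(tau | x).

    Stand-in for one posterior draw: Y | x ~ Normal(slope * x, (1 + |x|)^2),
    so Q(tau | x) = slope * x + (1 + |x|) * Phi^{-1}(tau).
    """
    def q(tau, x):
        return slope * x + (1.0 + abs(x)) * STD_NORMAL.inv_cdf(tau)
    return q


def sample_response(q, x, n, rng):
    """Inverse-transform sampling: push tau ~ Uniform(0, 1) through Q(. | x)."""
    draws = []
    while len(draws) < n:
        tau = rng.random()
        if tau > 0.0:  # inv_cdf needs 0 < tau < 1, and random() can return 0.0
            draws.append(q(tau, x))
    return draws


def split_uncertainty(quantile_fns, x, n, rng):
    """Law of total variance over posterior draws of Q.

    aleatoric: average spread of Y | x within a single draw of Q
    epistemic: spread of the conditional mean of Y | x across draws of Q
    """
    samples = [sample_response(q, x, n, rng) for q in quantile_fns]
    aleatoric = fmean([pvariance(s) for s in samples])
    epistemic = pvariance([fmean(s) for s in samples])
    return aleatoric, epistemic


rng = random.Random(0)
posterior = [make_quantile_fn(b) for b in (1.8, 2.0, 2.2)]  # hypothetical posterior draws
aleatoric, epistemic = split_uncertainty(posterior, x=1.0, n=20_000, rng=rng)
print(f"aleatoric ~ {aleatoric:.2f}, epistemic ~ {epistemic:.3f}")
```

In this toy setup the aleatoric part should be close to (1 + |x|)^2 = 4 at x = 1, while the epistemic part reflects only the disagreement among the three slopes.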

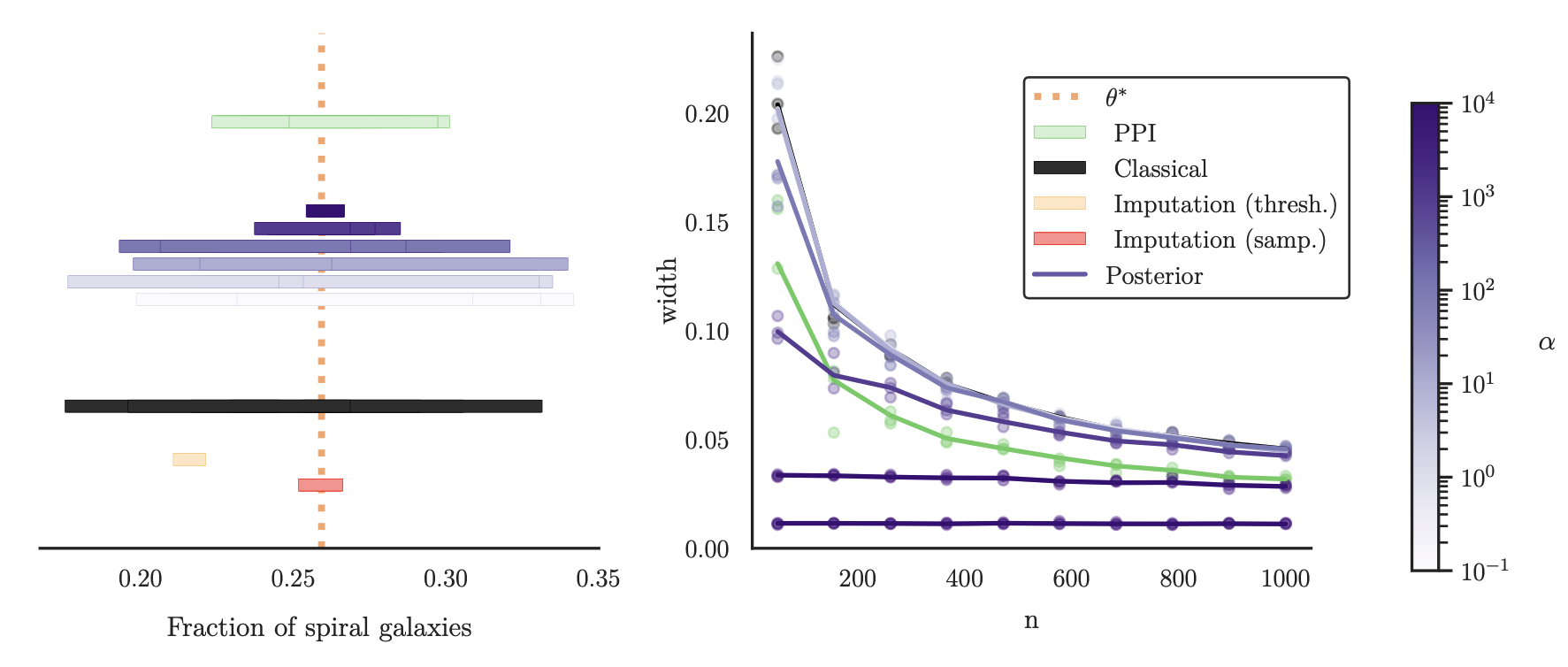

AI-Powered Bayesian Inference

We develop a framework that leverages predictive systems (e.g., large language models) to elicit informative priors for Bayesian analyses of risk minimizers. When the predictions are accurate, this can yield substantially narrower credible intervals. We also describe a calibration method for tuning how much influence the learned prior has.

Under review.
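One way to let predictions inform a prior on a risk minimizer is to center a Dirichlet process prior on synthetic data produced by the predictive system and sample the posterior with random weights. The sketch below assumes that construction, which may differ from the one in the paper. It targets the mean (the minimizer of squared-error risk), uses Gaussian stand-ins for both the observed data and the predictions, and treats the prior mass `alpha` as the knob a calibration method would tune. The function names are hypothetical.

```python
import random


def posterior_draws_of_mean(observed, synthetic, alpha, n_draws, rng):
    """Posterior draws of the mean (the squared-loss risk minimizer).

    Treats the empirical distribution of `synthetic` (hypothetical model
    predictions) as the base measure of a Dirichlet process prior with mass
    `alpha`. The posterior weights are then Dirichlet(1, ..., 1, alpha/m, ...,
    alpha/m), sampled here as normalized Gamma draws. alpha = 0 recovers the
    plain Bayesian bootstrap on the observed data.
    """
    m = len(synthetic)
    points = list(observed) + (list(synthetic) if alpha > 0 else [])
    draws = []
    for _ in range(n_draws):
        weights = [rng.gammavariate(1.0, 1.0) for _ in observed]
        if alpha > 0:
            weights += [rng.gammavariate(alpha / m, 1.0) for _ in synthetic]
        # The weighted mean minimizes the weighted squared-error risk.
        draws.append(sum(w * y for w, y in zip(weights, points)) / sum(weights))
    return draws


def interval_width(draws, level=0.95):
    """Width of the equal-tailed credible interval estimated from posterior draws."""
    s = sorted(draws)
    k = len(s)
    return s[int((1 + level) / 2 * k) - 1] - s[int((1 - level) / 2 * k)]


rng = random.Random(1)
observed = [rng.gauss(1.0, 1.0) for _ in range(20)]    # small real sample
synthetic = [rng.gauss(1.0, 1.0) for _ in range(200)]  # stand-in for good predictions
for alpha in (0, 20, 100):
    draws = posterior_draws_of_mean(observed, synthetic, alpha, 1000, rng)
    print(alpha, round(interval_width(draws), 3))
```

When the predictions match the data-generating process, larger `alpha` shrinks the interval. When they do not, a large `alpha` pulls the posterior toward the wrong answer, which is why the prior's influence needs to be calibrated rather than fixed.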

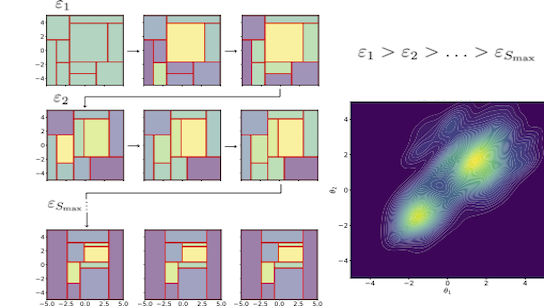

Tree Bandits for Generative Bayes

We develop a self-aware framework for likelihood-free Bayesian inference that learns from its past trials and errors. We apply recursive partitioning classifiers to the approximate Bayesian computation (ABC) lookup table to sequentially refine high-likelihood regions into boxes. Each box is treated as an arm in a binary bandit problem, with ABC acceptance as the reward.

Revision submitted to JASA.
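A stripped-down version of the bandit idea can be sketched in Python. The sketch assumes a toy simulator (the mean of 10 draws from Normal(θ, 1)), a Uniform(0, 10) prior, and a fixed grid of ten boxes in place of the recursive partitioning classifiers. Thompson sampling with Beta(1, 1) priors stands in for the bandit policy, and all names are hypothetical.

```python
import random
from statistics import fmean


def simulate_summary(theta, rng, n_obs=10):
    """Hypothetical simulator: sample mean of n_obs draws from Normal(theta, 1)."""
    return fmean([rng.gauss(theta, 1.0) for _ in range(n_obs)])


def bandit_abc(observed_summary, boxes, n_pulls, eps, rng):
    """Thompson sampling over parameter boxes with ABC acceptance as a 0/1 reward.

    Each box (lo, hi) is an arm with a Beta(1, 1) prior on its acceptance rate.
    Returns the number of pulls per box and the accepted parameter values.
    """
    successes = [0] * len(boxes)
    failures = [0] * len(boxes)
    accepted = []
    for _ in range(n_pulls):
        # Draw a plausible acceptance rate for every arm and pull the best one.
        scores = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = max(range(len(boxes)), key=scores.__getitem__)
        lo, hi = boxes[arm]
        theta = rng.uniform(lo, hi)
        # ABC step: accept theta if its simulated summary lands within eps of the data.
        if abs(simulate_summary(theta, rng) - observed_summary) < eps:
            successes[arm] += 1
            accepted.append(theta)
        else:
            failures[arm] += 1
    pulls = [s + f for s, f in zip(successes, failures)]
    return pulls, accepted


rng = random.Random(2)
boxes = [(float(i), float(i + 1)) for i in range(10)]  # fixed grid over a Uniform(0, 10) prior
pulls, accepted = bandit_abc(3.5, boxes, 2000, 0.2, rng)
print("pulls per box:", pulls)
print("accepted draws:", len(accepted))
```

Because boxes are pulled more often the more they pay off, the accepted values come from an adaptive proposal rather than from the prior, so they would need reweighting before being read as ABC posterior draws.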

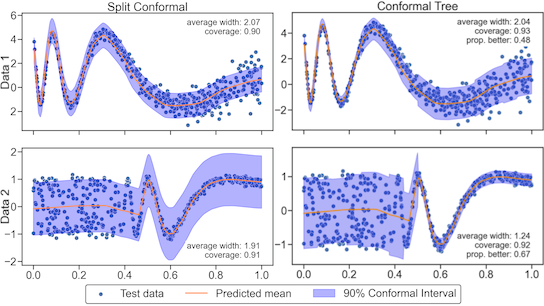

Adaptive Uncertainty Quantification for Generative AI

Many modern deep learning models function as black boxes and do not expose their training data to the end user. We develop a conformal prediction method that adapts directly to the calibration data, avoiding both further data splitting and access to the training data.

Under review.
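For context, the standard split-conformal baseline already needs only a black-box predictor and a calibration set. The sketch below shows that non-adaptive baseline, not the adaptive method described above; `black_box` is a hypothetical stand-in for a pretrained model, and the data are simulated.

```python
import math
import random


def conformal_radius(cal_scores, alpha):
    """Split-conformal threshold: the ceil((n + 1)(1 - alpha))-th smallest score."""
    n = len(cal_scores)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(cal_scores)[k - 1]


def black_box(x):
    """Hypothetical pretrained model: we can query it, but never see its training data."""
    return 2.0 * x


def prediction_interval(x, cal_x, cal_y, alpha=0.1):
    """Interval for y at x built only from held-out calibration pairs."""
    scores = [abs(y - black_box(xi)) for xi, y in zip(cal_x, cal_y)]
    r = conformal_radius(scores, alpha)
    pred = black_box(x)
    return pred - r, pred + r


rng = random.Random(3)
cal_x = [rng.uniform(0, 1) for _ in range(500)]
cal_y = [2.0 * x + rng.gauss(0, 1) for x in cal_x]
print(prediction_interval(0.5, cal_x, cal_y))
```

Under exchangeability, the ceil((n + 1)(1 - alpha)) rule guarantees marginal coverage of at least 1 - alpha, but the interval has the same width at every x. That fixed width is the limitation an adaptive method targets.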

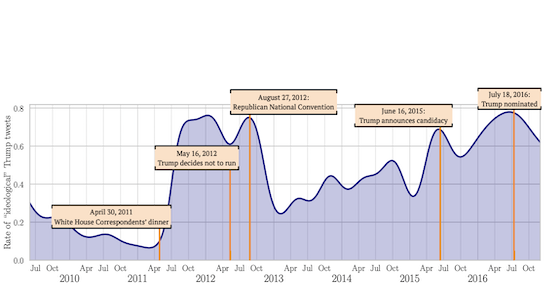

Measurement in the Age of LLMs

Much of social science centers on terms like "ideology" or "power", which generally elude precise definition and whose contextual meanings are trapped in the surrounding language. We use LLMs to flexibly navigate the conceptual clutter inherent in social scientific measurement tasks. We elicit ideological scales for both legislators and texts, and these scales accord closely with established methods and with our own judgement.

Revision in progress.